I had the chance to speak at the OpenStorage Summit a couple of weeks ago about RAID-Z (the ZFS implementation of RAID). The talk was an accumulation of blog posts and articles written by me and others as well as quite a bit of new material that’s been building up. The talk was an overview of the history of RAID-Z, the strengths and weaknesses that have emerged, and a look towards the challenges ahead for ZFS and RAID with some possible solutions and mitigating factors. Thanks to Nexenta for putting the conference together; questions or comments are very welcome.

The mission of ZFS was to simplify storage and to construct an enterprise level of quality from volume components by building smarter software; indeed, that notion is at the heart of the 7000 series. An important piece of that puzzle was eliminating the expensive RAID card used in traditional storage and replacing it with high-performance software RAID. To that end, Jeff invented RAID-Z; its key innovation over other software RAID techniques was to close the “RAID-5 write hole” by using variable-width stripes. RAID-Z, however, is definitely not RAID-5 despite that being the most common comparison.

RAID levels

Last year I wrote about the need for triple-parity RAID, and in that article I summarized the various RAID levels as enumerated by Gibson, Katz, and Patterson, along with Peter Chen, Edward Lee, and myself:

- RAID-0 Data is striped across devices for maximal write performance. It is an outlier among the other RAID levels as it provides no actual data protection.

- RAID-1 Disks are organized into mirrored pairs and data is duplicated on both halves of the mirror. This is typically the highest-performing RAID level, but at the expense of lower usable capacity.

- RAID-2 Data is protected by memory-style ECC (error correcting codes). The number of parity disks required is proportional to the log of the number of data disks.

- RAID-3 Protection is provided against the failure of any disk in a group of N+1 by carving up blocks and spreading them across the disks — bitwise parity. Parity resides on a single disk.

- RAID-4 A group of N+1 disks is maintained such that the loss of any one disk would not result in data loss. A single disk is designated as the dedicated parity disk. Not all disks participate in reads (the dedicated parity disk is not read except in the case of a failure). Typically parity is computed simply as the bitwise XOR of the other blocks in the row.

- RAID-5 N+1 redundancy as with RAID-4, but with distributed parity so that all disks participate equally in reads.

- RAID-6 This is like RAID-5, but employs two parity blocks, P and Q, for each logical row of N+2 disk blocks.

- RAID-7 Generalized M+N RAID with M data disks protected by N parity disks (without specifications regarding layout, parity distribution, etc).
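The XOR parity described for RAID-4 and RAID-5 can be illustrated with a minimal sketch. The block contents and the helper names `xor_parity` and `reconstruct` are made up for illustration; real implementations work on disk sectors rather than short byte strings.

```python
from functools import reduce

def xor_parity(blocks):
    """Return the bytewise XOR of a list of equally sized blocks."""
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))

def reconstruct(surviving_blocks, parity):
    """Rebuild the single missing data block from the survivors plus parity."""
    return xor_parity(list(surviving_blocks) + [parity])

data = [b"AAAA", b"BBBB", b"CCCC"]   # one row across three data disks
parity = xor_parity(data)            # what the parity disk would hold
lost = data[1]                       # pretend the second disk failed
rebuilt = reconstruct([data[0], data[2]], parity)
print(rebuilt == lost)
```

Because XOR is its own inverse, the same routine that computes parity also recovers any one missing block in the row.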

RAID-Z: RAID-5 or RAID-3?

Initially, ZFS supported just one parity disk (raidz1), and later added two (raidz2) and then three (raidz3) parity disks. But raidz1 is not RAID-5, and raidz2 is not RAID-6. RAID-Z avoids the RAID-5 write hole by distributing logical blocks among disks whereas RAID-5 aggregates unrelated blocks into fixed-width stripes protected by a parity block. This actually means that RAID-Z is far more similar to RAID-3 where blocks are carved up and distributed among the disks; whereas RAID-5 puts a single block on a single disk, RAID-Z and RAID-3 must access all disks to read a single block thus reducing the effective IOPS.
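The IOPS difference can be put into rough numbers. This is a deliberately crude model, not a measurement: it assumes small random reads, that every disk delivers the same number of random reads per second, and it ignores caching and parity overhead. The figure of 200 IOPS per disk and both function names are hypothetical.

```python
def raid5_random_read_iops(disks, disk_iops):
    """RAID-5: each small block lives on one disk, so disks serve reads independently."""
    return disks * disk_iops

def raidz_random_read_iops(disks, disk_iops):
    """RAID-Z: each block is spread across the disks, so one read occupies the group."""
    return disk_iops

disks, disk_iops = 5, 200   # hypothetical 4+1 group of 200-IOPS drives
print("RAID-5:", raid5_random_read_iops(disks, disk_iops))
print("RAID-Z:", raidz_random_read_iops(disks, disk_iops))
```

Under these assumptions the RAID-Z group behaves like a single disk for random reads, which is the effect described above.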

RAID-Z takes a significant step forward by enabling software RAID, but at the cost of backtracking on the evolutionary hierarchy of RAID. Now with advances like flash pools and the Hybrid Storage Pool, the IOPS from a single disk may be of less importance. But a RAID variant that shuns specialized hardware like RAID-Z and yet is economical with disk IOPS like RAID-5 would be a significant advancement for ZFS.

A key component of the ZFS Hybrid Storage Pool is Logzilla, a very fast device to accelerate synchronous writes. This component hides the write latency of disks to enable the use of economical, high-capacity drives. In the Sun Storage 7000 series, we use some very fast SAS and SATA SSDs from STEC as our Logzilla; the devices are great and STEC continues to be a terrific partner. The most important attribute of a good Logzilla device is that it has very low latency for sequential, uncached writes. The STEC part gives us about 100μs latency for a 4KB write, much much lower than most SSDs. Using SAS-attached SSDs rather than the more traditional PCI-attached, non-volatile DRAM enables a much simpler and more reliable clustering solution since the intent-log devices are accessible to both nodes in the cluster, but SAS is much slower than PCIe…

DDRdrive X1

Christopher George, CTO of DDRdrive, was kind enough to provide me with a sample of the X1, a 4GB NV-DRAM card with flash as a backing store. The card contains 4 DIMM slots populated with 1GB DIMMs; it’s a full-height card which limits its use in Sun/Oracle systems (typically half-height only), but there are many systems that can accommodate the card. The X1 employs a novel backup power solution; our Logzilla used in the 7000 series protects its DRAM write cache with a large super-capacitor, and many NV-DRAM cards use a battery. Supercaps can be limiting because of their physical size, and batteries have a host of problems including leaking and exploding. Instead, the DDRdrive solution puts a DC power connector on the PCIe faceplate and relies on an external source of backup power (a UPS for example).

Performance

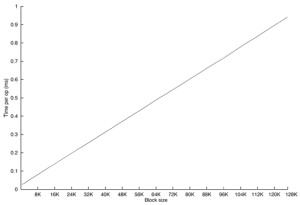

I put the DDRdrive X1 in our fastest prototype system to see how it performed. A 4K write takes about 51μs — better than our SAS Logzilla — but the SSD outperformed the X1 at transfer sizes over 32KB. The performance results on the X1 are already quite impressive, and since I ran those tests the firmware and driver have undergone several revisions to improve performance even more.
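To put the latency figures in perspective, here is a back-of-the-envelope conversion from per-write latency to synchronous write rate. It assumes a single writer with one 4KB write outstanding at a time, which is a simplification; the function name is made up for illustration.

```python
def sync_write_rate(latency_us, write_bytes=4096):
    """Return (writes per second, MB per second) with one write in flight at a time."""
    ops_per_sec = 1_000_000 / latency_us
    mb_per_sec = ops_per_sec * write_bytes / 1_000_000
    return ops_per_sec, mb_per_sec

for name, latency_us in (("SAS Logzilla", 100), ("DDRdrive X1", 51)):
    ops, mb = sync_write_rate(latency_us)
    print(f"{name}: {ops:,.0f} writes/s, {mb:.1f} MB/s")
```

With more writers or larger transfers the picture changes, as the results for transfer sizes over 32KB show.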

As a Logzilla

While the 7000 series won’t be employing the X1, for uses of ZFS that don’t involve clustering and for which external backup power is an option, the X1 is a great and economical Logzilla accelerator. Many users of ZFS have already started hunting for accelerators, and have tested out a wide array of SSDs. The X1 is a far more targeted solution, and is a compelling option. And if write performance has been a limiting factor in deploying ZFS, the X1 is a good reason to give ZFS another look.

Double-parity RAID, or RAID-6, is the de facto industry standard for storage; when I started talking about triple-parity RAID for ZFS earlier this year, the need wasn’t always immediately obvious. Double-parity RAID, of course, provides protection from up to two failures (data corruption or the whole drive) within a RAID stripe. The necessity of triple-parity RAID arises from the observation that while hard drive capacity has roughly followed Kryder’s law, doubling annually, hard drive throughput has improved far more modestly. Accordingly, the time to populate a replacement drive in a RAID stripe is increasing rapidly. Today, a 1TB SAS drive takes about 4 hours to fill at its theoretical peak throughput; in a real-world environment that number can easily double, and 2TB and 3TB drives expected this year and next won’t move data much faster. Those long periods spent in a degraded state increase the exposure to the bit errors and other drive failures that would in turn lead to data loss. The industry moved to double-parity RAID because one parity disk was insufficient; longer resilver times mean that we’re spending more and more time back at single-parity. From that it was obvious that double-parity will soon become insufficient. (I’m working on an article that examines these phenomena quantitatively so stay tuned… update Dec 21, 2009: you can find the article here)
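The resilver arithmetic can be sketched as follows. The only inputs taken from the text are the 1TB and 4 hour figures and the rough doubling in real-world conditions; holding throughput flat for the 2TB and 3TB drives is an assumption standing in for "won’t move data much faster", and the function name is made up.

```python
def fill_hours(capacity_tb, throughput_mb_s):
    """Hours needed to write an entire drive at a sustained throughput."""
    capacity_bytes = capacity_tb * 1e12
    seconds = capacity_bytes / (throughput_mb_s * 1e6)
    return seconds / 3600

# Throughput implied by filling 1TB in 4 hours (roughly 69 MB/s).
implied_mb_s = 1e12 / (4 * 3600) / 1e6

for tb in (1, 2, 3):
    best = fill_hours(tb, implied_mb_s)
    print(f"{tb}TB: {best:.0f}h at peak, about {2 * best:.0f}h if real-world time doubles")
```

If throughput stays flat, time spent degraded grows linearly with capacity, which is the exposure described above.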

Last week I integrated triple-parity RAID into ZFS. You can take a look at the implementation and the details of the algorithm here, but rather than describing the specifics, I wanted to describe its genesis. For double-parity RAID-Z, we drew on the work of Peter Anvin which was also the basis of RAID-6 in Linux. This work was more or less a tutorial for systems programmers, simplifying some of the more subtle underlying mathematics with an eye towards optimization. While a systems programmer by trade, I have a background in mathematics so was interested to understand the foundational work. James S. Plank’s paper A Tutorial on Reed-Solomon Coding for Fault-Tolerance in RAID-like Systems describes a technique for generalized N+M RAID. Not only was it simple to implement, but it could easily be made to perform well. I struggled for far too long trying to make the code work before discovering trivial flaws with the math itself. A bit more digging revealed that the author himself had published Note: Correction to the 1997 Tutorial on Reed-Solomon Coding 8 years later addressing those same flaws.

Predictably, the mathematically accurate version was far harder to optimize, stifling my enthusiasm for the generalized case. My more serious concern was that the double-parity RAID-Z code suffered some similar systemic flaw. This fear was quickly assuaged as I verified that the RAID-6 algorithm was sound. Further, from this investigation I was able to find a related method for doing triple-parity RAID-Z that was nearly as simple as its double-parity cousin. The math is a bit dense, but the key observation was that given that 3 is the smallest factor of 255 (the largest value representable by an unsigned byte) it was possible to find exactly 3 different seed or generator values after which there were collections of failures that formed uncorrectable singularities. Using that technique I was able to implement a triple-parity RAID-Z scheme that performed nearly as well as the double-parity version.
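For readers who want something concrete, here is a minimal sketch of computing three parity bytes over GF(2^8). It is an illustration, not the ZFS code: the generator values 1, 2, and 4 and the reducing polynomial 0x11d are assumptions chosen to follow the conventions of Anvin’s RAID-6 work, and the function names are made up.

```python
def gf_mul2(x):
    """Multiply a byte by 2 in GF(2^8), reducing by the polynomial 0x11d."""
    x <<= 1
    if x & 0x100:
        x ^= 0x11d
    return x

def gf_mul4(x):
    """Multiply a byte by 4 in GF(2^8) as two multiplications by 2."""
    return gf_mul2(gf_mul2(x))

def parity_pqr(data):
    """Compute P, Q, R for one byte per data disk using Horner's method."""
    p = q = r = 0
    for d in data:
        p ^= d                 # generator 1: plain XOR
        q = gf_mul2(q) ^ d     # generator 2
        r = gf_mul4(r) ^ d     # generator 4
    return p, q, r

print([hex(v) for v in parity_pqr([0x80, 0x01])])
```

Each parity costs only shifts and XORs per data byte, which is why this style of scheme can stay close to double-parity performance.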

As far as generic N-way RAID-Z goes, it’s still something I’d like to add to ZFS. Triple-parity will suffice for quite a while, but we may want more parity sooner for a variety of reasons. Plank’s revised algorithm is an excellent start. The test will be if it can be made to perform well enough or if some new clever algorithm will need to be devised. Now, as for what to call these additional RAID levels, I’m not sure. RAID-7 or RAID-8 seem a bit ridiculous and RAID-TP and RAID-QP aren’t any better. Fortunately, in ZFS triple-parity RAID is just raidz3.

A little over three years ago, I integrated double-parity RAID-Z into ZFS, a feature expected of enterprise class storage. This was in the early days of Fishworks when much of our focus was on addressing functional gaps. The move to triple-parity RAID-Z comes in the wake of a number of our unique advancements to the state of the art such as DTrace-powered Analytics and the Hybrid Storage Pool as the Sun Storage 7000 series products meet and exceed the standards set by the industry. Triple-parity RAID-Z will, of course, be a feature included in the next major software update for the 7000 series (2009.Q3).

I was having a conversation with an OpenBSD user and developer the other day, and he mentioned some ongoing work in the community to consolidate support for RAID controllers. The problem, he was saying, was that each controller had a different administrative model and utility — but all I could think was that the real problem was the presence of a RAID controller in the first place! As far as I’m concerned, ZFS and RAID-Z have obviated the need for hardware RAID controllers.

ZFS users seem to love RAID-Z, but a frustratingly frequent request is to be able to expand the width of a RAID-Z stripe. While the ZFS community may care about solving this problem, it’s not the highest priority for Sun’s customers and, therefore, for the ZFS team. It’s common for a home user to want to increase his total storage capacity by a disk or two at a time, but enterprise customers typically want to grow by multiple terabytes at once so adding on a new RAID-Z stripe isn’t an issue. When the request has come up on the ZFS discussion list, we have, perhaps unhelpfully, pointed out that the code is all open source and ready for that contribution. Partly, it’s because we don’t have time to do it ourselves, but also because it’s a tricky problem and we weren’t sure how to solve it.

Jeff Bonwick did a great job explaining how RAID-Z works, so I won’t go into it too much here, but the structure of RAID-Z makes it a bit trickier to expand than other RAID implementations. On a typical RAID with N+M disks, N data sectors will be written with M parity sectors. Those N data sectors may contain unrelated data, so modifying data on just one disk involves reading the data off that disk and updating both those data and the parity data. Expanding a RAID stripe in such a scheme is as simple as adding a new disk and updating the parity (if necessary). With RAID-Z, blocks are never rewritten in place, and there may be multiple logical RAID stripes (and multiple parity sectors) in a given row; we therefore can’t expand the stripe nearly as easily.
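The in-place update on a typical single-parity RAID can be sketched like this. It assumes plain XOR parity (M = 1) and tiny made-up sectors; the helper names are hypothetical.

```python
def xor_bytes(a, b):
    """Bytewise XOR of two equally sized sectors."""
    return bytes(x ^ y for x, y in zip(a, b))

def update_parity(old_parity, old_data, new_data):
    """Read-modify-write: remove the old data from the parity, then add the new data."""
    return xor_bytes(xor_bytes(old_parity, old_data), new_data)

row = [b"\x01\x02", b"\x10\x20", b"\x0a\x0b"]            # three data disks
parity = xor_bytes(xor_bytes(row[0], row[1]), row[2])

# Overwrite the sector on disk 1 without touching disks 0 and 2.
new_sector = b"\xff\x00"
parity = update_parity(parity, row[1], new_sector)
row[1] = new_sector

# Widening the stripe with a zero-filled disk leaves XOR parity unchanged.
widened = xor_bytes(parity, b"\x00\x00")
print(widened == parity)
```

The last step is why expansion is easy in this scheme: a new, empty disk contributes nothing to the XOR until data is written to it.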

A couple of weeks ago, I had lunch with Matt Ahrens to come up with a mechanism for expanding RAID-Z stripes — we were both tired of having to deflect reasonable requests from users — and, lo and behold, we figured out a viable technique that shouldn’t be very tricky to implement. While Sun still has no plans to allocate resources to the problem, this roadmap should lend credence to the suggestion that someone in the community might work on the problem.

The rest of this post will discuss the implementation of expandable RAID-Z; it’s not intended for casual users of ZFS, and there are no alchemic secrets buried in the details. It would probably be useful to familiarize yourself with the basic structure of ZFS, space maps (totally cool by the way), and the code for RAID-Z.

Dynamic Geometry

ZFS uses vdevs — virtual devices — to store data. A vdev may correspond to a disk or a file, or it may be an aggregate such as a mirror or RAID-Z. Currently the RAID-Z vdev determines the stripe width from the number of child vdevs. To allow for RAID-Z expansion, the geometry would need to be a more dynamic property. The storage pool code that uses the vdev would need to determine the geometry for the current block and then pass that as a parameter to the various vdev functions.

There are two ways to record the geometry. The simplest is to use the GRID bits (an 8-bit field) in the DVA (Device Virtual Address) which have already been set aside, but are currently unused. In this case, the vdev would need to have a new callback to set the contents of the GRID bits, and then a parameter to several of its other functions to pass in the GRID bits to indicate the geometry of the vdev when the block was written. An alternative approach suggested by Jeff and Bill Moore is something they call time-dependent geometry. The basic idea is that we store a record each time the geometry of a vdev is modified and then use the creation time for a block to infer the geometry to pass to the vdev. This has the advantage of conserving precious bits in the fixed-width DVA (though at 128 bits it’s still quite big), but it is a bit more complex since it would require essentially new metadata hanging off each RAID-Z vdev.
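The time-dependent geometry idea can be sketched as a sorted log of changes with a lookup by block creation time. Everything here is hypothetical: the `GeometryLog` class, its method names, and the use of a transaction group number as the "creation time" are assumptions for illustration, not existing ZFS structures.

```python
import bisect

class GeometryLog:
    """Hypothetical per-vdev record of stripe-width changes, keyed by creation time."""

    def __init__(self, initial_width):
        self._txgs = [0]                 # time at which each geometry took effect
        self._widths = [initial_width]   # stripe width from that time onward

    def record_expansion(self, txg, new_width):
        """Append a geometry change; changes must be recorded in increasing time order."""
        if txg <= self._txgs[-1]:
            raise ValueError("geometry changes must be recorded in order")
        self._txgs.append(txg)
        self._widths.append(new_width)

    def width_at(self, birth_txg):
        """Return the stripe width in effect when a block with this birth time was written."""
        i = bisect.bisect_right(self._txgs, birth_txg) - 1
        return self._widths[i]

log = GeometryLog(initial_width=4)
log.record_expansion(txg=1000, new_width=5)
print(log.width_at(999), log.width_at(1000))
```

The lookup is a binary search, so the cost per block stays small even after many expansions; the price is the extra metadata that has to live with each RAID-Z vdev.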

Metaslab Folding

When the user requests a RAID-Z vdev be expanded (via an existing or new zpool(1M) command-line option) we’ll apply a new fold operation to the space map for each metaslab. This transformation will take into account the space we’re about to add with the new devices. Each range [a, b] under a fold from width n to width m will become

[ m * (a / n) + (a % n), m * (b / n) + (b % n) ]

The alternative would have been to account for m – n free blocks at the end of every stripe, but that would have been overly onerous both in terms of processing and in terms of bookkeeping. For space maps that are resident, we can simply perform the operation on the AVL tree by iterating over each node and applying the necessary transformation. For space maps which aren’t in core, we can do something rather clever: by taking advantage of the log structure, we can simply append a new type of space map entry that indicates that this operation should be applied. Today we have allocated, free, and debug; this would add fold as an additional operation. We’d apply that fold operation to each of the 200 or so space maps for the given vdev. Alternatively, using the idea of time-dependent geometry above, we could simply append a marker to the space map and access the geometry from that repository.

Normally, we only rewrite the space map if the on-disk log structure is twice as large as necessary. I’d argue that the fold operation should always trigger a rewrite since processing it always requires an O(n) operation, but that’s really an ancillary point.
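The fold transformation on an in-core space map can be sketched as follows. This is a minimal illustration that applies the endpoint formula from this section literally, using integer division; representing the space map as a plain list of (a, b) pairs instead of an AVL tree is a simplification, and the function names are made up.

```python
def fold_offset(offset, n, m):
    """Map an offset laid out in rows of width n to the same row and column at width m."""
    return m * (offset // n) + (offset % n)

def fold_range(a, b, n, m):
    """Apply the fold to both endpoints of the range [a, b]."""
    return fold_offset(a, n, m), fold_offset(b, n, m)

def fold_space_map(ranges, n, m):
    """Fold every range in a space map, one transformation per entry."""
    return [fold_range(a, b, n, m) for a, b in ranges]

# Expanding from 3 columns to 4: offsets keep their row and column.
print(fold_space_map([(0, 2), (3, 5), (5, 7)], n=3, m=4))
```

Each entry is visited once, which matches the linear cost noted above for processing a fold.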

vdev Update

At the same time as the previous operation, the vdev metadata will need to be updated to reflect the additional device. This is mostly just bookkeeping, and a matter of chasing down the relevant code paths to modify and augment.

Scrub

With the steps above, we’re actually done for some definition since new data will be written in stripes that include the newly added device. The problem is that extant data will still be stored in the old geometry and most of the capacity of the new device will be inaccessible. The solution to this is to scrub the data, reading off every block and rewriting it to a new location. Currently this isn’t possible on ZFS, but Matt and Mark Maybee have been working on something they call block pointer rewrite which is needed to solve a variety of other problems and nicely completes this solution as well.

That’s It

After Matt and I had finished thinking this through, I think we were both pleased by the relative simplicity of the solution. That’s not to say that implementing it is going to be easy (there are still plenty of gaps to fill in), but the basic algorithm is sound. A nice property that falls out is that in addition to changing the number of data disks, it would also be possible to use the same mechanism to add an additional parity disk to go from single- to double-parity RAID-Z, another common request.

So I can now extend a slightly more welcoming invitation to the ZFS community to engage on this problem and contribute in a very concrete way. I’ve posted some diffs which I used to sketch out some ideas; that might be a useful place to start. If anyone would like to create a project on OpenSolaris.org to host any ongoing work, I’d be happy to help set that up.

The other day I posted about a prototype I had created that adds a gzip compression algorithm to ZFS. ZFS already allows administrators to choose to compress filesystems using the LZJB compression algorithm. This prototype introduced a more effective — albeit more computationally expensive — alternative based on zlib.

As an arbitrary measure, I used tar(1) to create and expand archives of an ON (Solaris kernel) source tree on ZFS filesystems compressed with lzjb and gzip algorithms as well as on an uncompressed ZFS filesystem for reference:

Thanks for the feedback. I was curious if people would find this interesting and they do. As a result, I’ve decided to polish this wad up and integrate it into Solaris. I like Robert Milkowski’s recommendation of options for different gzip levels, so I’ll be implementing that. I’ll also upgrade the kernel’s version of zlib from 1.1.4 to 1.2.3 (the latest) for some compression performance improvements. I’ve decided (with some hand-wringing) to succumb to the requests for me to make these code modifications available. This is not production quality. If anything goes wrong it’s completely your problem/fault — don’t make me regret this. Without further disclaimer: pdf patch
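The trade-off between gzip levels is easy to see in user space. The sketch below uses Python’s standard `zlib` module on a made-up, highly repetitive sample; it is not the kernel code path, and the sizes it prints say nothing about real ZFS workloads.

```python
import zlib

def compare_levels(data, levels=(1, 6, 9)):
    """Compress data at several zlib levels and return {level: compressed size}."""
    results = {}
    for level in levels:
        compressed = zlib.compress(data, level)
        # Every level must round-trip to the original bytes.
        if zlib.decompress(compressed) != data:
            raise ValueError(f"round trip failed at level {level}")
        results[level] = len(compressed)
    return results

sample = b"int zfs_example(void) { return (0); }\n" * 1000
sizes = compare_levels(sample)
for level, size in sizes.items():
    print(f"level {level}: {len(sample)} -> {size} bytes")
```

Higher levels spend more CPU searching for matches, which is why exposing the level as an option lets administrators pick their own balance.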

In reply to some of the comments:

UX-admin One could choose between lzjb for day-to-day use, or bzip2 for heavily compressed, “archival” file systems (as we all know, bzip2 beats the living daylights out of gzip in terms of compression about 95-98% of the time).

It may be that bzip2 is a better algorithm, but we already have (and need) zlib in the kernel, and I’m loath to add another algorithm.

ivanvdb25 Hi, I was just wondering if the gzip compression has been enabled, does it give problems when an ZFS volume is created on an X86 system and afterwards imported on a Sun Sparc?

That isn’t a problem. Data can be moved from one architecture to another (and I’ll be verifying that before I putback).

dennis Are there any documents somewhere explaining the hooks of zfs and how to add features like this to zfs? Would be useful for developers who want to add features like filesystem-based encryption to it. Thanks for your great work!

There aren’t any documents exactly like that, but there’s plenty of documentation in the code itself — that’s how I figured it out, and it wasn’t too bad. The ZFS source tour will probably be helpful for figuring out the big picture.

Update 3/22/2007: This work was integrated into build 62 of onnv.
